Smart Extended Reality Lab (smartXR-Lab)

Welcome to our state-of-the-art lab for Medical Mixed Reality (MMR), run by the AI-guided Therapies group (https://ait.ikim.nrw) of the Institute for Artificial Intelligence in Medicine (IKIM), where cutting-edge technology meets healthcare innovation. As artificial intelligence ushers in a new era of medical advances, we integrate the power of mixed reality to transform patient care, training, and research. Our immersive lab environment combines virtual reality, augmented reality, and the expertise of medical professionals to go beyond the boundaries of traditional medicine. The lab serves as a dynamic hub for exploration, discovery, research, and collaboration. Leveraging artificial intelligence, medical mixed reality, IT infrastructure, GPU clusters, and strong medical networks, we aim to be at the forefront of groundbreaking advances.

We invite students, enthusiasts, and companies to join forces with us for exciting internship and collaboration opportunities. Contact us today to embark on this transformative journey.

Equipment

Equipped with advanced tools, our MMR-Lab empowers unique and innovative research for patient care. We have the following devices:

- Structured Light 3D Scanners (Artec Leo and Autoscan Inspec)

- 3D Printer (Creality SLA resin printer)

- Mixed/Augmented Reality Glasses (HTC VIVE Pro 1 & 2, Varjo XR-3, Meta Quest 3, Microsoft HoloLens 2, and Apple Vision Pro)

- Electromagnetic Tracking Systems (NDI Aurora and NDI 3D Guidance trakSTAR)

- Optical Tracking Systems (OptiTrack with PrimeX41 cameras and NDI Polaris Vega)

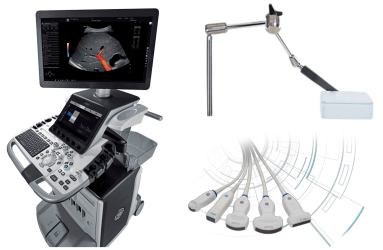

- Ultrasound Scanner (GE Healthcare Ultrasound Scanner Logiq E10)

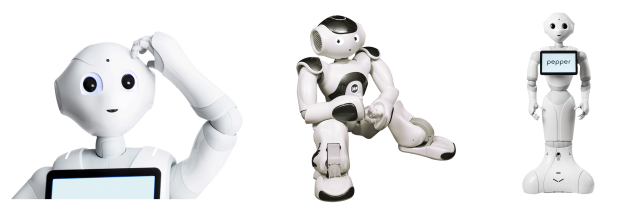

- Robots (NAO and Pepper)

- Phantoms (Karstadt models and cranio-maxillofacial surgical phantom)

Presentation of the newly opened XR-Lab during the symposium of the Zentrum für virtuelle und erweiterte Realität in der Medizin (ZvRM)

Structured Light 3D Scanners

Introducing our cutting-edge 3D scanning technology: Artec Leo and Autoscan Inspec. Artec Leo is a handheld scanner known for its ease of use and real-time capabilities, allowing you to quickly capture detailed 3D models with high precision. It’s perfect for scanning large objects like limbs or even entire rooms.

Autoscan Inspec, on the other hand, is a tabletop scanner designed for industrial quality control and inspection. With its high-precision scanning capabilities, it ensures accurate measurements and analysis of complex and small surgical instruments, such as tweezers, scissors, or blades.

Both scanners use structured light technology, making them safe to use on human subjects. Additionally, we provide 3D scanning spray for highly reflective and shiny objects to optimize surface scan quality. Experience the power of our advanced 3D scanning technology today.

3D Printer

Introducing our Creality SLA resin printer. It is built on stereolithography (SLA), an additive manufacturing process that uses UV light to selectively harden liquid resin layer by layer, and offers exceptional precision and detail: it can print parts with small features, tight tolerances, and smooth surface finishes. This printer allows us to make replicas of objects captured with our structured light scanners, CTA/MRI-based surface models, manually designed cutting guides, and AI-generated patient-specific implants, bringing our ideas to life accurately and within days. For even faster prototyping, we plan to permanently install an FDM printer as well.
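Because the resin is cured one layer at a time, the number of layers (and, for printers that expose a whole layer at once, most of the print time) follows directly from the part height and the chosen layer thickness. The following minimal Python sketch estimates both. The layer height, exposure, lift, and bottom-layer values are hypothetical placeholders, not the settings of our printer or of any particular resin.

```python
import math


def estimate_print(height_mm: float,
                   layer_height_mm: float = 0.05,
                   exposure_s: float = 2.5,
                   lift_s: float = 6.0,
                   bottom_layers: int = 5,
                   bottom_exposure_s: float = 30.0) -> tuple[int, float]:
    """Estimate (layer count, print hours) for a resin print.

    All default values are hypothetical placeholders, not the settings
    of our printer or of any particular resin.
    """
    if height_mm <= 0 or layer_height_mm <= 0:
        raise ValueError("height and layer height must be positive")
    # The part is cured one slice at a time, so the layer count depends only
    # on the part height. Rounding first removes floating-point noise such as
    # 0.3 / 0.1 == 2.9999999999999996 before rounding up.
    layers = math.ceil(round(height_mm / layer_height_mm, 9))
    # The first layers usually get a longer exposure so they stick to the plate.
    n_bottom = min(bottom_layers, layers)
    n_normal = layers - n_bottom
    seconds = (n_bottom * (bottom_exposure_s + lift_s)
               + n_normal * (exposure_s + lift_s))
    return layers, seconds / 3600.0


layers, hours = estimate_print(60.0)  # a hypothetical 60 mm tall model
print(f"{layers} layers, about {hours:.1f} h")  # 1200 layers, about 2.9 h
```

With these placeholder settings, halving the layer height doubles the layer count and roughly doubles the print time, which is the usual trade-off between surface quality and speed.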

Mixed and Augmented Reality Glasses

Our MMR Lab offers a wide range of cutting-edge mixed reality devices, each with its own capabilities, to enhance your experience in multi-user applications. Mixed reality fuses the real and virtual worlds, and there are two main approaches: immersive video pass-through (mixed reality), where cameras capture the surroundings and display them inside an otherwise opaque headset, and optical see-through (augmented reality), where virtual content is overlaid on a transparent display. By combining real and virtual elements, mixed reality goes beyond the capabilities of virtual reality.

Our lab features multiple HTC VIVE Pro 1 and 2 headsets, which use infrared base stations for user tracking, while the head-mounted display (HMD) captures the surrounding environment. This allows filmed and registered reality to be superimposed onto the virtual world. For an even more immersive experience, we offer the high-end Varjo XR-3 mixed reality headset. Unlike the VIVE Pro headsets, the XR-3 does not require a base station and also incorporates hand tracking, providing an exceptional blend of both realities.

Besides the HTC VIVE headsets, we have acquired 16 Meta Quest 3 mixed reality headsets, which are versatile, wireless, and offer a great price/performance ratio, making them ideal for development and research in a clinical setting.

Additionally, we have 10 HoloLens 2 (HL2) devices, which offer optical see-through functionality. Because the real world remains directly visible through the display, HL2 users experience less of the discomfort typically associated with mixed reality headsets. These wireless devices include hand tracking, simultaneous localization and mapping (SLAM), and an onboard computer, ensuring a seamless integration of both worlds.

Our latest addition is a pair of Apple Vision Pro units, which keep us on top of the latest developments. Together, these headsets allow us to develop for Android-based (Meta Quest), Microsoft, and Apple devices.

At our MMR Lab, we strive to provide you with the latest mixed reality devices to enrich your view and unlock limitless possibilities for medical research and development.

Electromagnetic Tracking Systems (EMT)

Our Mixed Reality Lab is proud to offer the NDI Aurora and 3D Guidance trakSTAR systems. Both generate an electromagnetic field and measure changes within this field to determine the 3D position and orientation of small sensors in real time. These tracking solutions are designed to change the way medical instruments are tracked and visualized, and they now bring real-time 3D instrument tracking to our MMR lab. The sensors are small enough to be embedded in a wide range of medical instruments, including ultrasound probes, endoscopes, catheters, guidewires, and even the tip of a needle, enabling precise tracking of instruments through complex anatomical structures. When integrated into an original equipment manufacturer (OEM) image-guided surgery or interventional system, the Aurora acts as a vital link between patient image sets and 3D space by instantly localizing the relative positions and orientations of instruments within the operative field. Enhance your mixed reality projects with these tracking capabilities and take your medical simulations and interventions to the next level. We plan to combine these systems with our mixed and augmented reality devices, to use them frequently for assessing stand-alone tracking systems, and thereby to closely align our research with the latest hospital standards.

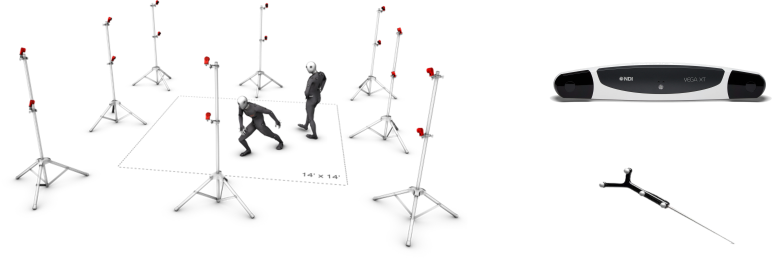

Optical tracking systems (OTS)

Introducing our Optical Tracking Systems (OTS) for advanced motion capture in mixed reality: the NDI Polaris Vega and an OptiTrack system with the latest PrimeX41 cameras. Both track spherical infrared-reflective markers by triangulating their positions from the views of at least two cameras. The NDI Polaris Vega is a portable system renowned for its high accuracy and provides real-time tracking of movement. The OptiTrack PrimeX41 cameras, known for their performance and reliability in live motion capture, cover our MMR room and deliver submillimetre accuracy and precision, so even subtle movements are captured with high fidelity and can be integrated into your mixed reality projects. Whether you are conducting medical simulations, doing research, or developing interactive applications, our OTS provides a powerful toolset for capturing, analysing, and visualizing motion in mixed reality.
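The triangulation principle can be illustrated with the simple midpoint method. Each camera that sees a marker defines a ray from its optical centre through the marker's image; ideally the rays of two cameras intersect at the marker, and with real, noisy measurements one takes the midpoint of the shortest segment between them. This minimal plain-Python sketch assumes the camera centres and ray directions are already known from calibration. The Polaris Vega and OptiTrack systems use their own internal algorithms, which this sketch does not reproduce.

```python
import math

Vec = tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> tuple[Vec, float]:
    """Triangulate a marker from two camera rays of the form c + s * d.

    Returns the midpoint of the shortest segment between the rays and the
    length of that segment (0 for perfectly intersecting rays), which can
    serve as a simple consistency check.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if denom <= 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    # Ray parameters of the two mutually closest points.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    gap = math.sqrt(_dot(_sub(p1, p2), _sub(p1, p2)))
    return _scale(_add(p1, p2), 0.5), gap


# Two hypothetical cameras 2 m apart, both looking at a marker at (0, 0, 2).
marker, gap = triangulate_midpoint((-1.0, 0.0, 0.0), (1.0, 0.0, 2.0),
                                   (1.0, 0.0, 0.0), (-1.0, 0.0, 2.0))
print(marker, gap)  # (0.0, 0.0, 2.0) 0.0
```

Using more than two cameras, as in a room-scale setup, adds redundancy: each extra view constrains the marker position further and makes the estimate more robust to noise and occlusion.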

Ultrasound Scanner

Introducing the GE Healthcare Ultrasound Scanner Logiq E10, the newest addition to our arsenal and a device poised to transform medical imaging. Once its API is released, which is expected next year, the Logiq E10 can be integrated with our artificial intelligence and mixed reality solutions, as well as our advanced tracking systems. This integration will unlock new dimensions of interactive visualization, diagnostic accuracy, and real-time guidance for medical professionals. The Logiq E10 is equipped with an electromagnetic tracking system for the ultrasound probe, which supports precise and accurate imaging during procedures. Its limited 3D scanning and CT/MRI fusion capabilities serve as a solid foundation, which we aim to enhance and expand in upcoming projects. Needle tracking and many other features also await exploration, opening doors to innovative applications and improved patient care. With the GE Healthcare Logiq E10, we want to be at the forefront of the future of ultrasound technology.

Stay tuned as we embark on an exciting journey to explore the untapped possibilities of the Logiq E10. Witness the convergence of advanced ultrasound imaging, Artificial Intelligence, and Mixed Reality, as we strive to reshape the landscape of medical diagnostics and interventions. Together, we will shape the future of healthcare.

Aldebaran humanoid robots

Meet NAO and Pepper, our Aldebaran humanoid robots, which create unique experiences in our Mixed Reality Lab. NAO's expressive movements and programmable behavior make it ideal for research, education, and entertainment. Pepper, our social humanoid, excels at interactive engagement thanks to advanced speech recognition and environmental awareness. Together, they are excellent platforms for research on human-robot interaction, patient education, and personalized care. Currently, the number of ongoing projects is limited by available manpower. Join us in our lab and let NAO and Pepper guide us towards the future of medical technology. Together, we can explore the endless possibilities of these remarkable robots in revolutionizing healthcare and human-robot interaction.

Practice phantoms

Projects come to life with the help of our phantoms, which serve as the foundation for developing, training, and showcasing our innovations. With our 3D printer, we can create (miniature) models for our research. In addition, we frequently use our larger-scale Karstadt models, Ms. and Mr. White, as well as our high-end cranio-maxillofacial surgery phantom from GTsimulators. These phantoms provide an invaluable platform for developing and demonstrating our medical Mixed Reality applications.

To further elevate our research, both Mr. White and our surgery phantom have undergone high-dose CT scans, enabling us to add a medical imaging component to our Mixed Reality applications. Looking ahead, we are committed to expanding our repertoire of phantoms, particularly for ultrasound applications. By integrating these accurate phantoms with our Mixed Reality devices and tracking systems, we can unlock new dimensions of interactive exploration, enhanced visualization, and innovative medical solutions.

Example projects at our SmartXR Lab

Some of our current projects involve markerless patient registration, 3D visualization of CT data and models directly within the patient, multi-user learning experiences, 3D databases (some of which are publicly available at https://medshapenet.ikim.nrw), inside-out tracking of patients and surgical instruments, and ongoing work on an augmented reality-assisted ultrasound (US) scanning approach with artificial intelligence support. Our vision is a future in which we make the previously imperceptible visible, illuminating the unseen.

While we cannot showcase all our current projects online, we are excited to share a glimpse into our work.

3D visualization of CT and segmented models

This AR technique enables direct visualization of CT data and models within the patient, facilitating the translation from 2D CT slices to a 3D representation for enhanced diagnosis and treatment planning.

Gsaxner C, Pepe A, Li J, Ibrahimpasic U, Wallner J, Schmalstieg D, et al. Augmented Reality for Head and Neck Carcinoma Imaging: Description and Feasibility of an Instant Calibration, Markerless Approach. Comput Methods Programs Biomed. 2021;200:105854.
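Going from 2D CT slices to a 3D representation starts with placing every voxel in a common patient coordinate system (in millimetres). Toolkits such as ITK use the mapping physical point = origin + direction × (spacing ⊙ index); the plain-Python sketch below implements that mapping and then turns a simple intensity threshold into a point cloud that could be rendered in a headset. It is a minimal illustration, not the method of the cited paper: the origin, spacing, direction, and threshold values are assumptions, and real CT data would take them from the image header (e.g. DICOM).

```python
Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]

# Direction matrix whose columns are the image axes in patient coordinates.
IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def index_to_physical(index: tuple[int, int, int], origin: Vec3,
                      spacing: Vec3, direction: Mat3 = IDENTITY) -> Vec3:
    """Map a voxel index (i, j, k) to patient coordinates in mm."""
    # Scale the integer index by the voxel size along each image axis.
    scaled = [index[axis] * spacing[axis] for axis in range(3)]
    # Rotate into patient axes and shift by the position of voxel (0, 0, 0).
    return tuple(origin[r] + sum(direction[r][c] * scaled[c] for c in range(3))
                 for r in range(3))


def threshold_to_points(volume, threshold: float, origin: Vec3, spacing: Vec3,
                        direction: Mat3 = IDENTITY) -> list[Vec3]:
    """Return patient-space points of all voxels with value > threshold.

    `volume` is a nested list indexed as volume[k][j][i] (slice, row, column).
    """
    points = []
    for k, slice_2d in enumerate(volume):
        for j, row in enumerate(slice_2d):
            for i, value in enumerate(row):
                if value > threshold:
                    points.append(index_to_physical((i, j, k), origin,
                                                    spacing, direction))
    return points


# Hypothetical 0.5 x 0.5 x 2.0 mm voxels starting at (-100, -120, 50) mm.
print(index_to_physical((10, 20, 5), (-100.0, -120.0, 50.0), (0.5, 0.5, 2.0)))
# (-95.0, -110.0, 60.0)
```

Keeping the direction matrix explicit matters in practice: scanners do not always store images aligned with the patient axes, and ignoring the orientation would place the overlay in the wrong spot.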

Markerless patient registration

We have developed an automated markerless registration method for seamlessly integrating CT data and segmented models into the patient. This method addresses the switching focus problem, in which surgeons must repeatedly shift their attention between the patient and a separate navigation monitor, by providing a direct 2D-to-3D mapping of CT data onto the patient and enabling surgical navigation in a cost-effective manner.

Gsaxner C, Pepe A, Wallner J, Schmalstieg D, Egger J. Markerless Image-to-Face Registration for Untethered Augmented Reality in Head and Neck Surgery. 2019. p. 236–44.
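However the correspondences are found, a registration result is a rigid transform, a rotation R and translation t, that maps CT model coordinates into the headset's world coordinates. Given corresponding 3D points, the least-squares rigid transform has a closed-form solution, the Kabsch (SVD) method, which iterative schemes such as ICP reuse in every iteration. This NumPy sketch shows only that step and assumes the correspondences are already known; it is not the full markerless pipeline of the cited paper, which must first establish them automatically from the face. The landmark coordinates in the usage example are synthetic.

```python
import numpy as np


def rigid_register(source, target):
    """Least-squares rigid transform with target ~ source @ R.T + t (Kabsch).

    source, target: (N, 3) arrays of corresponding points with N >= 3, e.g.
    hypothetical landmarks on a CT-derived face model and the same landmarks
    as measured by the headset. Returns (R, t, rms_residual).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    if (source.shape != target.shape or source.ndim != 2
            or source.shape[1] != 3 or len(source) < 3):
        raise ValueError("expected two (N, 3) arrays with N >= 3")
    mu_s, mu_t = source.mean(axis=0), target.mean(axis=0)
    # Cross-covariance of the centred point sets.
    h = (source - mu_s).T @ (target - mu_t)
    u, _, vt = np.linalg.svd(h)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    sign = 1.0 if np.linalg.det(vt.T @ u.T) >= 0 else -1.0
    r = vt.T @ np.diag([1.0, 1.0, sign]) @ u.T
    t = mu_t - r @ mu_s
    # Root-mean-square residual after alignment, in the input units.
    rms = float(np.sqrt(np.mean(np.sum((source @ r.T + t - target) ** 2, axis=1))))
    return r, t, rms


# Synthetic check: rotate hypothetical landmarks by 30 degrees and shift them.
rng = np.random.default_rng(0)
model_pts = rng.uniform(-50.0, 50.0, size=(10, 3))  # mm
angle = np.deg2rad(30.0)
r_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -3.0, 120.0])
measured = model_pts @ r_true.T + t_true
r_est, t_est, rms = rigid_register(model_pts, measured)
```

With noisy real measurements, the RMS residual does not drop to zero and gives a first indication of registration quality.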

Instrument tracking

Using the sensors of the HoloLens 2, we have achieved highly accurate instrument tracking with infrared reflective markers. Combined with our previous work, this offers an out-of-the-box solution for surgical navigation without the need for additional expensive equipment. An interesting follow-up would be free-form segmentation and tracking of instruments, i.e., without infrared reflective markers, which is likely less accurate but promising.

Gsaxner C, Li J, Pepe A, Schmalstieg D, Egger J. Inside-Out Instrument Tracking for Surgical Navigation in Augmented Reality. In: Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology. Osaka, Japan: ACM; 2021. p. 1–11.
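Marker-based tools are commonly told apart by their geometry: each tool carries reflective spheres at a unique set of inter-marker distances. Because rotation and translation preserve distances, a cluster of detected markers can be matched to a tool definition by comparing sorted pairwise distances. This minimal Python sketch assumes the markers have already been triangulated and grouped into clusters; the tool names, geometries, and the 0.5 mm tolerance are hypothetical. Once a tool is identified, its pose follows from a rigid fit of the tool definition onto the detected markers.

```python
import itertools
import math
from typing import Optional

Point = tuple[float, float, float]

# Hypothetical tool definitions (marker positions in mm in each tool's own frame).
TOOLS: dict[str, list[Point]] = {
    "pointer": [(0.0, 0.0, 0.0), (50.0, 0.0, 0.0), (0.0, 80.0, 0.0), (30.0, 30.0, 0.0)],
    "scalpel": [(0.0, 0.0, 0.0), (40.0, 0.0, 0.0), (0.0, 60.0, 0.0), (70.0, 50.0, 0.0)],
}


def distance_signature(points: list[Point]) -> list[float]:
    """Sorted pairwise distances; unchanged by rotation and translation."""
    return sorted(math.dist(p, q) for p, q in itertools.combinations(points, 2))


def identify_tool(detected: list[Point], tools: dict[str, list[Point]],
                  tol_mm: float = 0.5) -> Optional[str]:
    """Return the name of the best-matching tool, or None if nothing fits."""
    signature = distance_signature(detected)
    best_name, best_err = None, math.inf
    for name, geometry in tools.items():
        if len(geometry) != len(detected):
            continue
        reference = distance_signature(geometry)
        # Worst-case deviation between corresponding sorted distances.
        err = max(abs(a - b) for a, b in zip(signature, reference))
        if err <= tol_mm and err < best_err:
            best_name, best_err = name, err
    return best_name


# The "pointer" rotated by 90 degrees about z and shifted, as a camera might see it.
seen = [(100.0, 200.0, 300.0), (100.0, 250.0, 300.0),
        (20.0, 200.0, 300.0), (70.0, 230.0, 300.0)]
print(identify_tool(seen, TOOLS))  # pointer
```

This is also why tool designs avoid repeating the same distances: two tools with near-identical signatures could not be distinguished reliably.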

Multi-User Learning Experiences

Collaborative learning takes center stage in our research: we create immersive multi-user experiences that foster interactive education and training for medical students. The same approach could also enable multidisciplinary teams to discuss a patient's treatment plan without needing to be in the same room.

3D surgical tool collection

With our structured light 3D scanners, namely the Artec Leo and Autoscan Inspec, we have scanned over 100 instruments commonly used in clinical routines. This extensive collection serves various purposes, including general instrument detection and tracking in operating room settings, as well as medical simulation or training scenarios in virtual reality and mixed reality. These surface models can be enhanced to achieve realism using shaders available in popular game engines like Unity3D or through 3D modeling software such as Blender.

Luijten G, Gsaxner C, Li J, Pepe A, Ambigapathy N, Kim M, et al. 3D surgical instrument collection for computer vision and extended reality. Sci Data. 2023 Nov 11;10(1):796.

HINTS Eye Tracking Project

Dizziness is a common complaint in clinical practice, with causes ranging from benign vestibular disorders to life-threatening central etiologies, particularly stroke. Rapidly identifying the underlying cause of dizziness is critical for timely intervention and treatment. Using the HoloLens 2's built-in eye tracker, accessed via the Extended Eye Tracking repository on GitHub, we aim to diagnose the cause with traditional and machine learning methods. The goal is an objective and accurate diagnosis that could improve, or even replace, the current HINTS (Head Impulse, Nystagmus, Test of Skew) exam. Future work will focus on bringing this approach out of the hospital and into our patients' pockets, enabling quick diagnosis anytime, anywhere.
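As an illustration of a traditional (non-learning) building block, eye-tracking recordings are often segmented with a velocity threshold (the I-VT approach): gaze direction samples are converted to angular speed, and samples above the threshold are labelled as fast eye movements. A jerk nystagmus, one of the signs assessed in the HINTS exam, shows up as repeated slow drifts interrupted by such fast phases. This minimal Python sketch assumes gaze direction vectors sampled at a fixed rate. The 90 Hz rate, the 100 deg/s threshold, and the helper `yaw_to_gaze` are hypothetical and are not taken from our project or from the HoloLens 2 eye tracking API.

```python
import math

Vec = tuple[float, float, float]


def angular_speeds(gaze: list[Vec], rate_hz: float) -> list[float]:
    """Angular speed in deg/s between consecutive gaze direction samples."""
    speeds = []
    for a, b in zip(gaze, gaze[1:]):
        cross = (a[1] * b[2] - a[2] * b[1],
                 a[2] * b[0] - a[0] * b[2],
                 a[0] * b[1] - a[1] * b[0])
        dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
        # atan2(|a x b|, a . b) is numerically stable even for tiny angles.
        angle = math.atan2(math.hypot(*cross), dot)
        speeds.append(math.degrees(angle) * rate_hz)
    return speeds


def classify_ivt(gaze: list[Vec], rate_hz: float = 90.0,
                 threshold_deg_s: float = 100.0) -> list[str]:
    """Label each inter-sample interval as 'fast' or 'slow' (I-VT)."""
    return ["fast" if v > threshold_deg_s else "slow"
            for v in angular_speeds(gaze, rate_hz)]


def count_fast_phases(labels: list[str]) -> int:
    """Count runs of consecutive 'fast' labels (candidate fast phases)."""
    return sum(1 for i, lab in enumerate(labels)
               if lab == "fast" and (i == 0 or labels[i - 1] != "fast"))


def yaw_to_gaze(deg: float) -> Vec:
    """Hypothetical helper: horizontal gaze angle -> unit direction vector."""
    rad = math.radians(deg)
    return (math.sin(rad), 0.0, math.cos(rad))


# Synthetic trace: slow drifts to the right, each followed by a quick reset.
trace = [yaw_to_gaze(d) for d in (0, 0.5, 1.0, 1.5, -1.5, -1.0, -0.5, 0.0, -3.0)]
labels = classify_ivt(trace)
print(labels, count_fast_phases(labels))
```

In this synthetic trace, the slow drifts move at 45 deg/s and the resets at 270 deg/s, so the classifier finds two fast phases. Real recordings would additionally need filtering for noise and blinks before thresholding.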

DREAMING Challenge

We explore the potential of Diminished Reality (DR) in medicine, a modality that removes real objects from the environment by virtually replacing them with their background. DR holds great potential for medical applications, for example by giving surgeons an unobstructed view of the operation site in scenarios where space constraints or disruptive instruments hinder visibility. To advance this field, we hosted the DREAMING challenge at ISBI 2024. The challenge is registered at Zenodo, and the dataset is available at https://zenodo.org/records/10570773. Find out more on our DREAMING challenge website.
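The basic idea of diminished reality can be shown with a deliberately naive baseline: if the camera is static and the occluding object moves, the background behind it is visible in other frames, so each masked pixel can be filled with the median of its unoccluded values over time. This NumPy sketch assumes grayscale frames from a fixed camera and known binary masks of the object to remove. It is only an illustration and is not the method of the DREAMING baseline or of the winning entries.

```python
import warnings

import numpy as np


def temporal_median_inpaint(frames, masks):
    """Fill occluded pixels from the per-pixel temporal median background.

    frames: (T, H, W) grayscale frames from a static camera.
    masks:  (T, H, W) booleans, True where the object to remove is.
    Returns (filled_frames, background); pixels that are occluded in every
    frame have no observed background and stay NaN.
    """
    frames = np.asarray(frames, dtype=float)
    masks = np.asarray(masks, dtype=bool)
    if frames.shape != masks.shape or frames.ndim != 3:
        raise ValueError("frames and masks must both have shape (T, H, W)")
    # Hide occluded observations so they do not enter the median.
    visible = np.where(masks, np.nan, frames)
    with warnings.catch_warnings():
        # nanmedian warns about all-NaN pixels; those are left as NaN.
        warnings.simplefilter("ignore", category=RuntimeWarning)
        background = np.nanmedian(visible, axis=0)
    # Keep visible pixels and replace occluded ones with the background.
    filled = np.where(masks, background[np.newaxis, :, :], frames)
    return filled, background


# Tiny synthetic clip: a 1 x 2 image where the value 99 is the "instrument".
frames = [[[10, 99]], [[10, 20]], [[99, 20]]]
masks = [[[False, True]], [[False, False]], [[True, False]]]
filled, background = temporal_median_inpaint(frames, masks)
```

The approach breaks down exactly where DR is hardest: with a moving camera or an object that never leaves a region, there is no observed background to copy, which is what learned inpainting methods try to address.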


Baseline paper:

van Meegdenburg T, Luijten G, Kleesiek J, Puladi B, Ferreira A, Egger J, et al. A Baseline Solution for the ISBI 2024 DREAMING Challenge. In: Proceedings of the IEEE International Symposium on Biomedical Imaging (ISBI) 2024.

Best method (challenge winners):

Wu ZJ, Seshimo R, Saito H, Isogawa M. Divide and Conquer: Video Inpainting for Diminished Reality in Low-Resource Settings. In: Proceedings of the IEEE International Symposium on Biomedical Imaging (ISBI) 2024.

MedShapeNet

We introduce MedShapeNet, a collection of medical shapes, such as surgical instruments, human anatomy, pathologies, and more, for medical applications in machine learning, computer vision, and extended reality. Derived directly from real-world data, MedShapeNet offers over 100,000 shapes across 23 datasets, paired with annotations. Accessible via a web interface and a Python API, it supports various image processing tasks and applications in virtual, augmented, and mixed reality, as well as 3D printing. Explore it at https://medshapenet.ikim.nrw.
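Many of these applications, for instance estimating the material needed to 3D print a shape, start from simple geometric measures of a closed triangle surface mesh. The plain-Python sketch below computes the volume (via the divergence theorem, summing the signed tetrahedra spanned by the origin and each triangle) and the surface area. It assumes the shape is already loaded as a watertight mesh with consistently oriented triangles; file loading and the MedShapeNet Python API are deliberately not shown, and the example tetrahedron is made up.

```python
import math

Vec = tuple[float, float, float]


def _cross(u: Vec, v: Vec) -> Vec:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def mesh_volume_and_area(vertices: list[Vec],
                         faces: list[tuple[int, int, int]]) -> tuple[float, float]:
    """Volume and surface area of a closed, consistently oriented triangle mesh.

    Units follow the vertex coordinates (e.g. mm gives mm^3 and mm^2).
    """
    volume6 = 0.0  # six times the signed volume
    area2 = 0.0    # twice the surface area
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        # Signed volume of the tetrahedron (origin, a, b, c), times 6.
        bc = _cross(b, c)
        volume6 += a[0] * bc[0] + a[1] * bc[1] + a[2] * bc[2]
        # |(b - a) x (c - a)| is twice the triangle's area.
        n = _cross((b[0] - a[0], b[1] - a[1], b[2] - a[2]),
                   (c[0] - a[0], c[1] - a[1], c[2] - a[2]))
        area2 += math.hypot(*n)
    # abs() makes the result independent of inward vs outward orientation,
    # but the orientation must still be consistent across all faces.
    return abs(volume6) / 6.0, area2 / 2.0


# A unit right tetrahedron with outward-facing triangles.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
tris = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
volume, area = mesh_volume_and_area(verts, tris)
print(volume, area)
```

For a mesh in millimetres, dividing the volume by 1000 gives millilitres, which is convenient for a first resin estimate before printing.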


Interested?

If you are intrigued by one of our ongoing projects or have a vision for a new project that combines our extensive range of equipment with AI solutions, we encourage you to reach out to us. We welcome opportunities for (master's) internships and collaborations. Examples of potential projects include mixed reality visualization of needles and instruments using our advanced tracking systems, tackling free-form stand-alone tracking challenges with our cutting-edge headsets, developing applications directly on the GE ultrasound device, and creating mixed reality applications for editing AI-generated patient-specific models before 3D printing them as rapid prototypes. If you have a project idea of your own, we are here to help you make valuable contributions to the field. Don't hesitate to get in touch with us.

See the contact form section and e-mail me directly!

Assistant NAO

Hi, my name is NAO! This site is currently under construction, as our smart medical extended reality lab is growing fast. Did you find anything of interest? Let us know via the contact form, or contact my supervisor directly at the e-mail address listed below.

Kind regards, NAO.

Contact

Master's students interested in an internship are encouraged to send us an e-mail.

- Gijs.Luijten@uk-essen.de

- Institute for AI in Medicine, Girardetstraße 2, 45131 Essen, Germany

- IKIM-UME